Source_Code

Turn your view into a smart nutrition guide with real-time product info, AI insights, and sustainable choices.

Our Goal

Process and Outcome

Process

First, we had to find a useful way to apply the Passthrough camera feature. Many ideas were scrapped until we came across the Open Food Facts API. We then mapped user journeys and drew UML diagrams to work out which features the application needed to be as inclusive and easy to use as possible. Next, we divided the team by strengths: some members programmed, while others designed and coordinated the team. The first working feature was the panel system that came with the Meta SDK, on which we based all of our panels. Weekly meetings within the team and with our supervisor kept us moving toward our goal of designing and programming the application. We tested the program on the provided Meta Quest 3 headsets to catch and minimize errors.

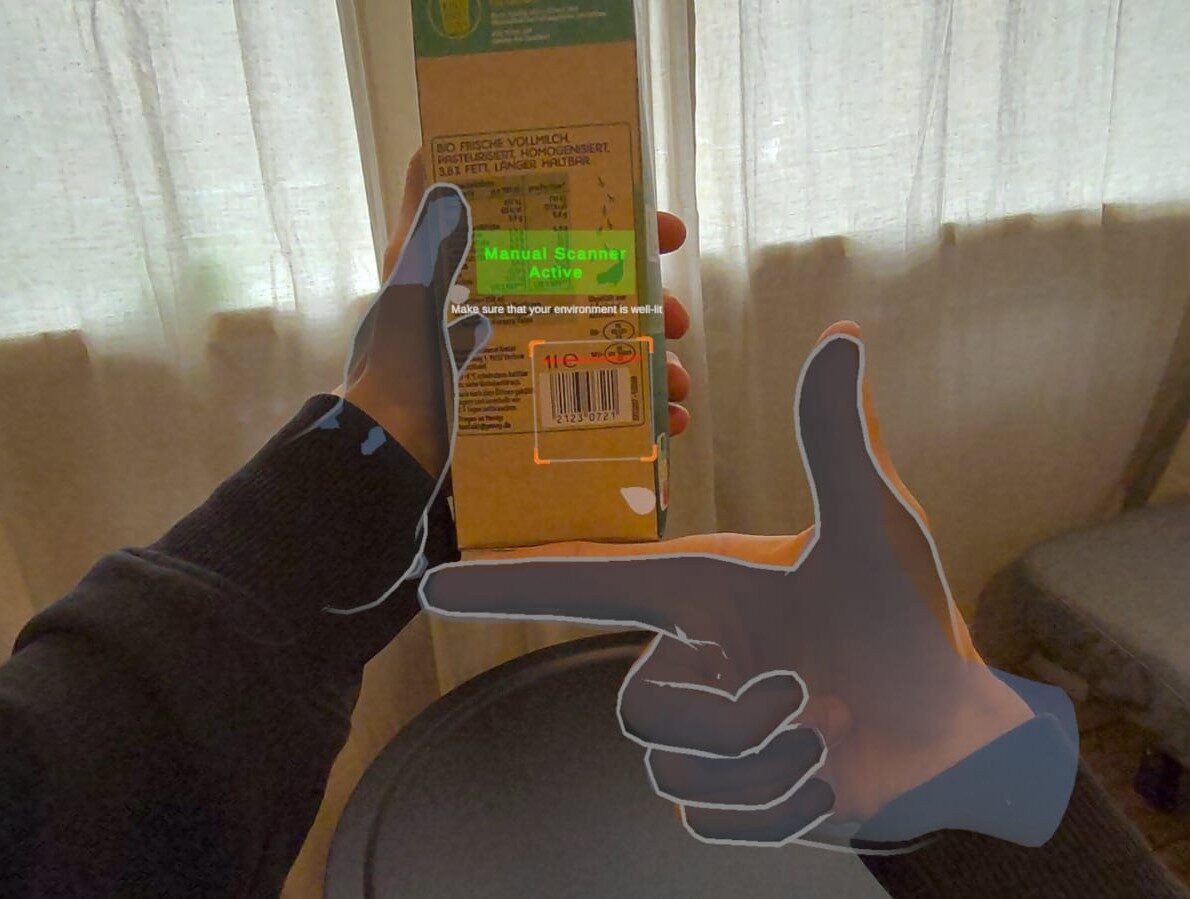

The required hand gesture to manually scan a barcode.

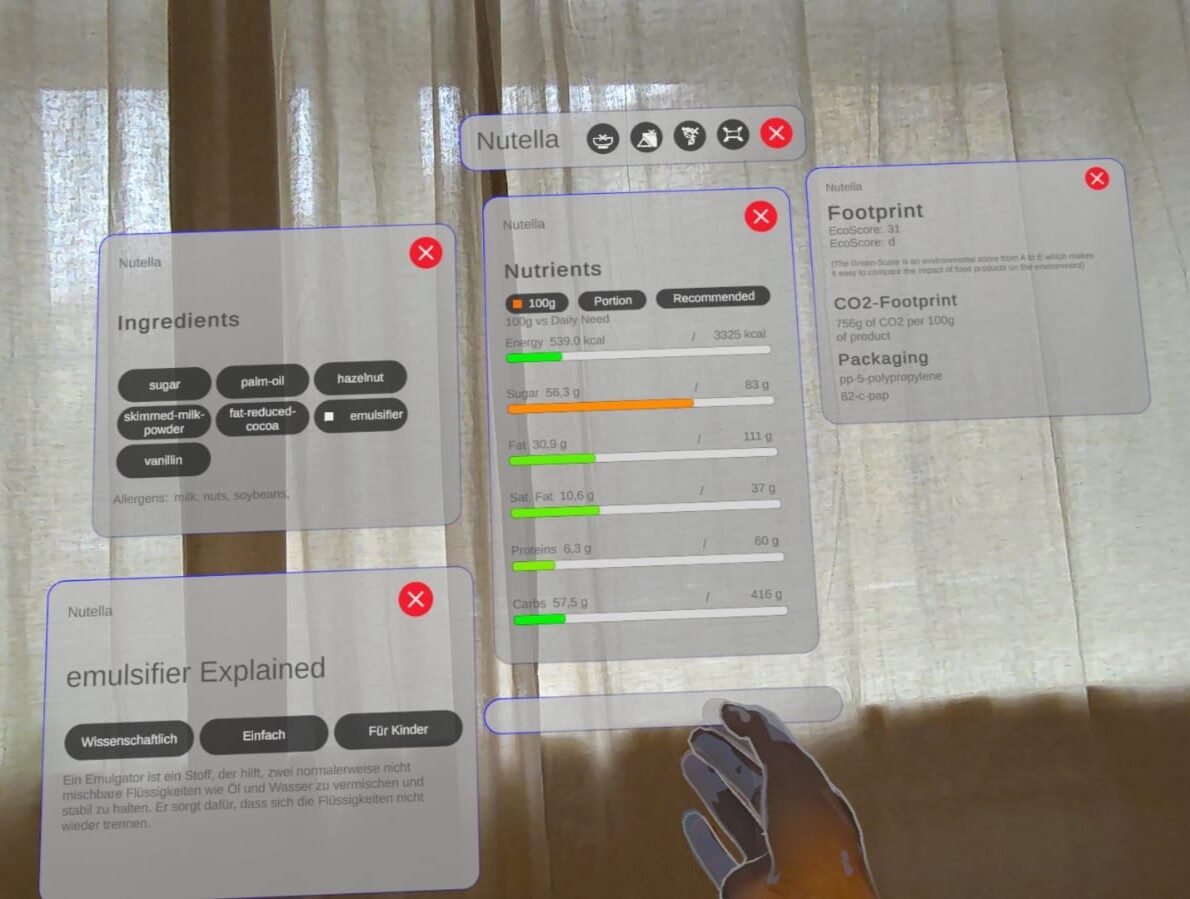

Information panels displayed for a scanned product.

Tools and Resources

- The application was built in Unity (version 6000.0.46f1) and written in C# using a Visual Studio extension.

- To develop for the Meta Quest 3, we used the Meta XR SDK, which also allowed us to create our own hand gestures.

- The Open Food Facts API provides product information, looked up by barcode.

- The ZXing library decodes barcodes from the camera feed.

- The Deutsche Gesellschaft für Ernährung e.V. (DGE, German Nutrition Society) provides the reference values for daily nutrient intake across different activity levels, ages and body types.

- The AI explanation feature is built on Google Gemini.
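
To illustrate how the barcode lookup can fit together, here is a minimal Python sketch. It builds an Open Food Facts product URL from a decoded barcode and extracts the fields a panel might show. The app itself is written in C# in Unity; Python is used here only for brevity. The v2 endpoint URL and the response fields (`status`, `product_name`, `nutriments`, `ecoscore_grade`) are assumptions based on the public Open Food Facts API and should be checked against its documentation. `ProductInfo`, `product_url` and `parse_product` are hypothetical names, not the project's code.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Assumed public Open Food Facts v2 product endpoint (verify against the API docs).
OFF_PRODUCT_URL = "https://world.openfoodfacts.org/api/v2/product/{barcode}.json"


@dataclass
class ProductInfo:
    """Hypothetical subset of product data that a nutrition panel might display."""
    name: Optional[str]
    nutriments_per_100g: dict  # e.g. {"sugars": 20.0, "salt": 0.5}
    ecoscore_grade: Optional[str]


def product_url(barcode: str) -> str:
    """Build the lookup URL for a barcode string decoded by the scanner (e.g. ZXing)."""
    if not (barcode.isascii() and barcode.isdigit()):
        raise ValueError(f"barcode must contain only ASCII digits: {barcode!r}")
    return OFF_PRODUCT_URL.format(barcode=barcode)


def parse_product(response_text: str) -> Optional[ProductInfo]:
    """Extract panel data from an Open Food Facts JSON response.

    Returns None when the response reports that the product was not found
    (assumed here to be any status other than 1).
    """
    data = json.loads(response_text)
    if data.get("status") != 1:
        return None
    product = data.get("product", {})
    nutriments = product.get("nutriments", {})
    # Keep only the per-100 g values and strip the "_100g" suffix from their keys.
    per_100g = {
        key[: -len("_100g")]: value
        for key, value in nutriments.items()
        if key.endswith("_100g")
    }
    return ProductInfo(
        name=product.get("product_name"),
        nutriments_per_100g=per_100g,
        ecoscore_grade=product.get("ecoscore_grade"),
    )
```

In a Unity app, the response body could be fetched with `UnityWebRequest.Get(url)` before parsing. In plain Python, `urllib.request.urlopen` would do the same job. Separating URL building, fetching and parsing keeps the parser testable without network access.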

Product/Outcome

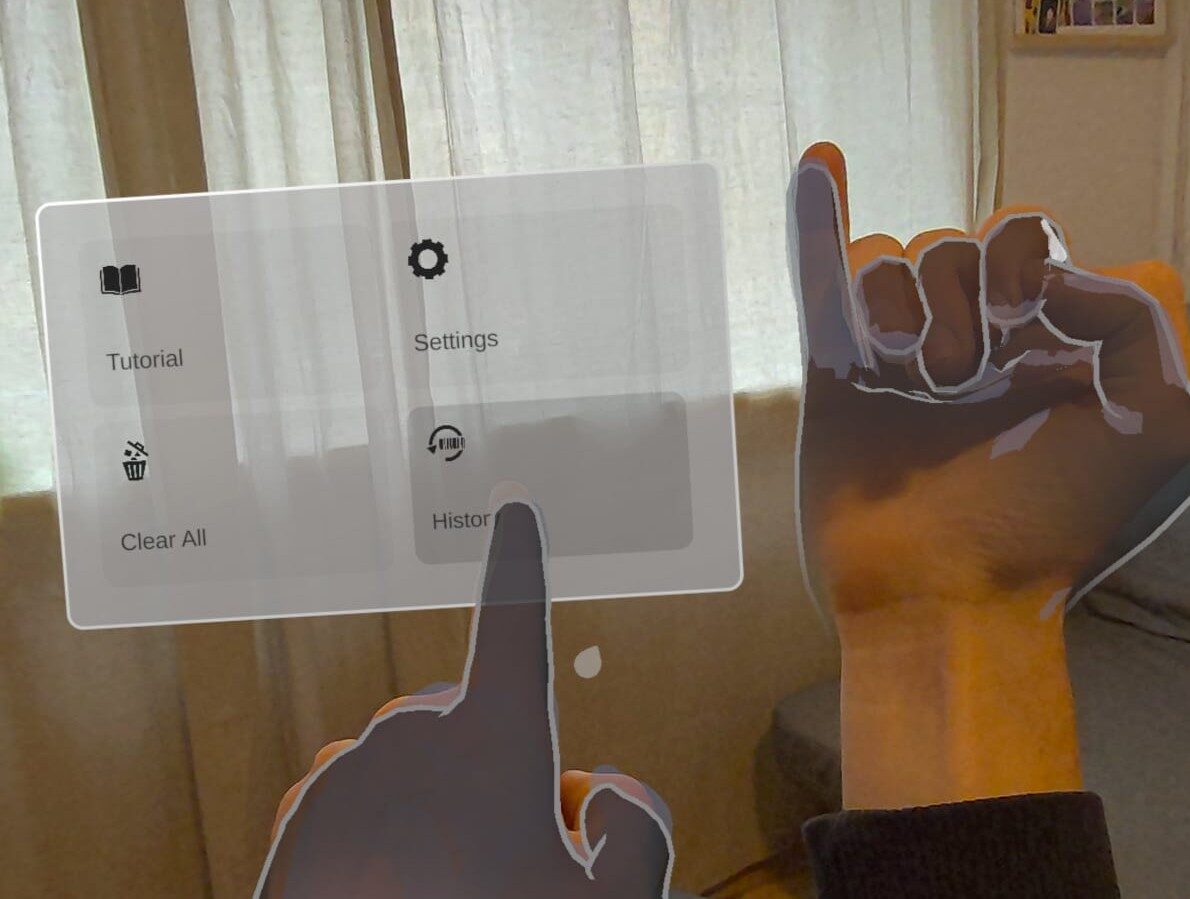

We built an application for the Meta Quest 3 that uses its Passthrough camera feature. The camera reliably scans product barcodes, and the application generates movable mixed-reality panels that show a product's ingredients, nutritional values and environmental impact. You can also enter information about yourself to see how much of your daily nutrient allowance a product covers. If you don't understand an ingredient, you can click it to get an explanation generated by a built-in AI feature, at difficulty levels ranging from easy to scientific. To support first-time VR users, the application includes a simple tutorial. The interface offers both a light and a dark mode. Panels can be closed individually or all at once with a clear-all button.
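The daily-allowance check comes down to simple arithmetic. The amount of a nutrient in a portion is (value per 100 g × portion in g) / 100. Its share of the daily allowance is that amount divided by the user's daily reference value, times 100. The minimal sketch below assumes three inputs:

- per-100 g values taken from the product data
- a portion size entered by the user (an assumption; the app might simply use 100 g)
- daily reference values supplied by the caller (in the app, these would come from the DGE tables for the user's age, activity level and body type)

The function name `percent_of_daily_allowance` is hypothetical.

```python
from typing import Dict


def percent_of_daily_allowance(
    per_100g: Dict[str, float],
    portion_g: float,
    daily_reference: Dict[str, float],
) -> Dict[str, float]:
    """Share of the user's daily reference intake covered by one portion, in percent.

    per_100g:        nutrient amounts per 100 g of product
    portion_g:       hypothetical portion size the user eats, in grams
    daily_reference: the user's daily reference values, in the same units as per_100g
    Nutrients without a (non-zero) reference value are skipped.
    """
    if portion_g < 0:
        raise ValueError("portion_g must be non-negative")
    result: Dict[str, float] = {}
    for nutrient, amount_per_100g in per_100g.items():
        reference = daily_reference.get(nutrient)
        if not reference:  # missing or zero reference: no meaningful percentage
            continue
        amount_in_portion = amount_per_100g * portion_g / 100.0
        result[nutrient] = 100.0 * amount_in_portion / reference
    return result
```

For example, `percent_of_daily_allowance({"sugars": 20.0}, 50, {"sugars": 50.0})` returns `{"sugars": 20.0}`. That is, a 50 g portion of a product with 20 g sugar per 100 g covers 20% of an illustrative 50 g reference value. The 50 g figure is chosen for the arithmetic only and is not a DGE value.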

Team