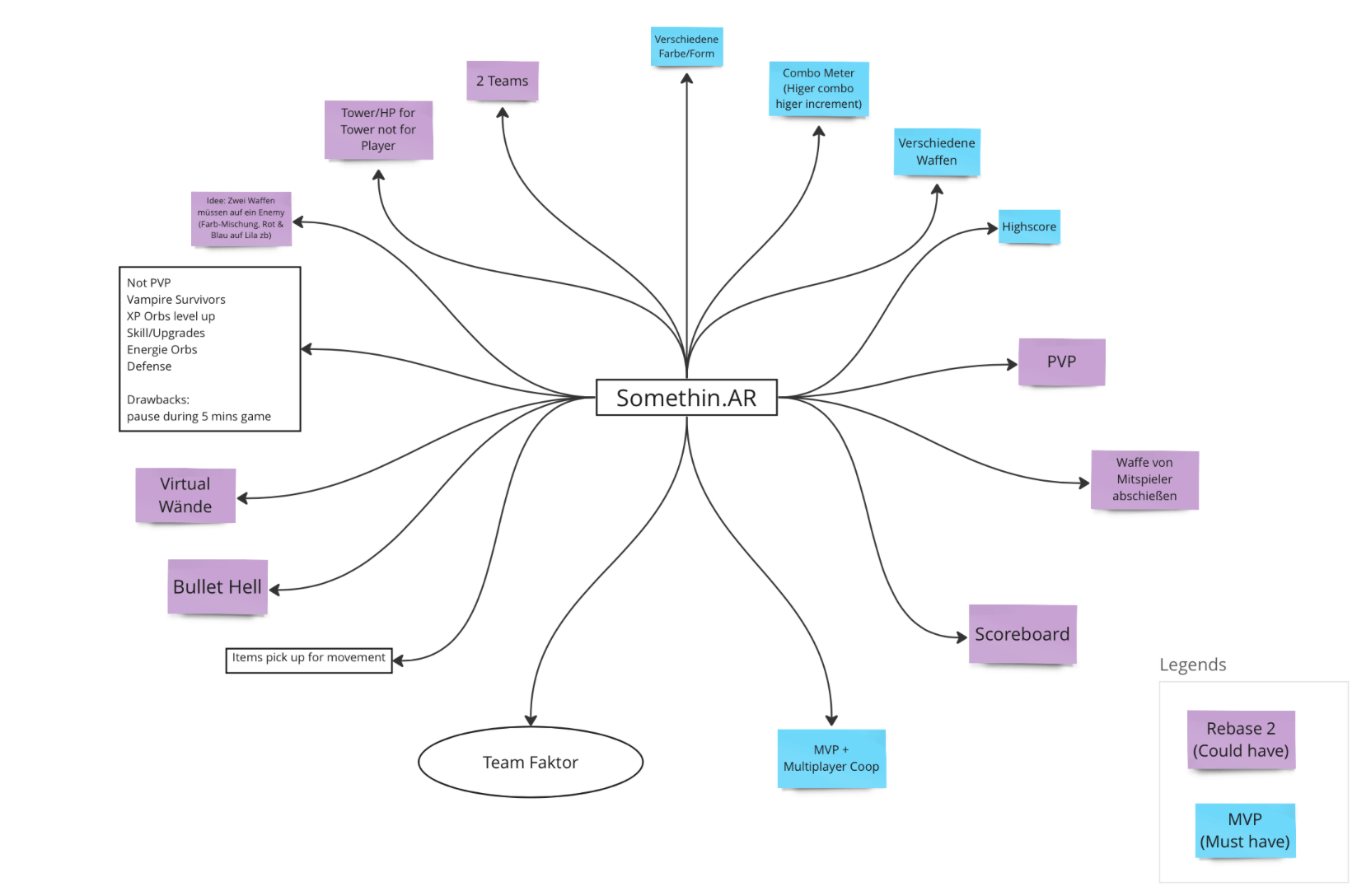

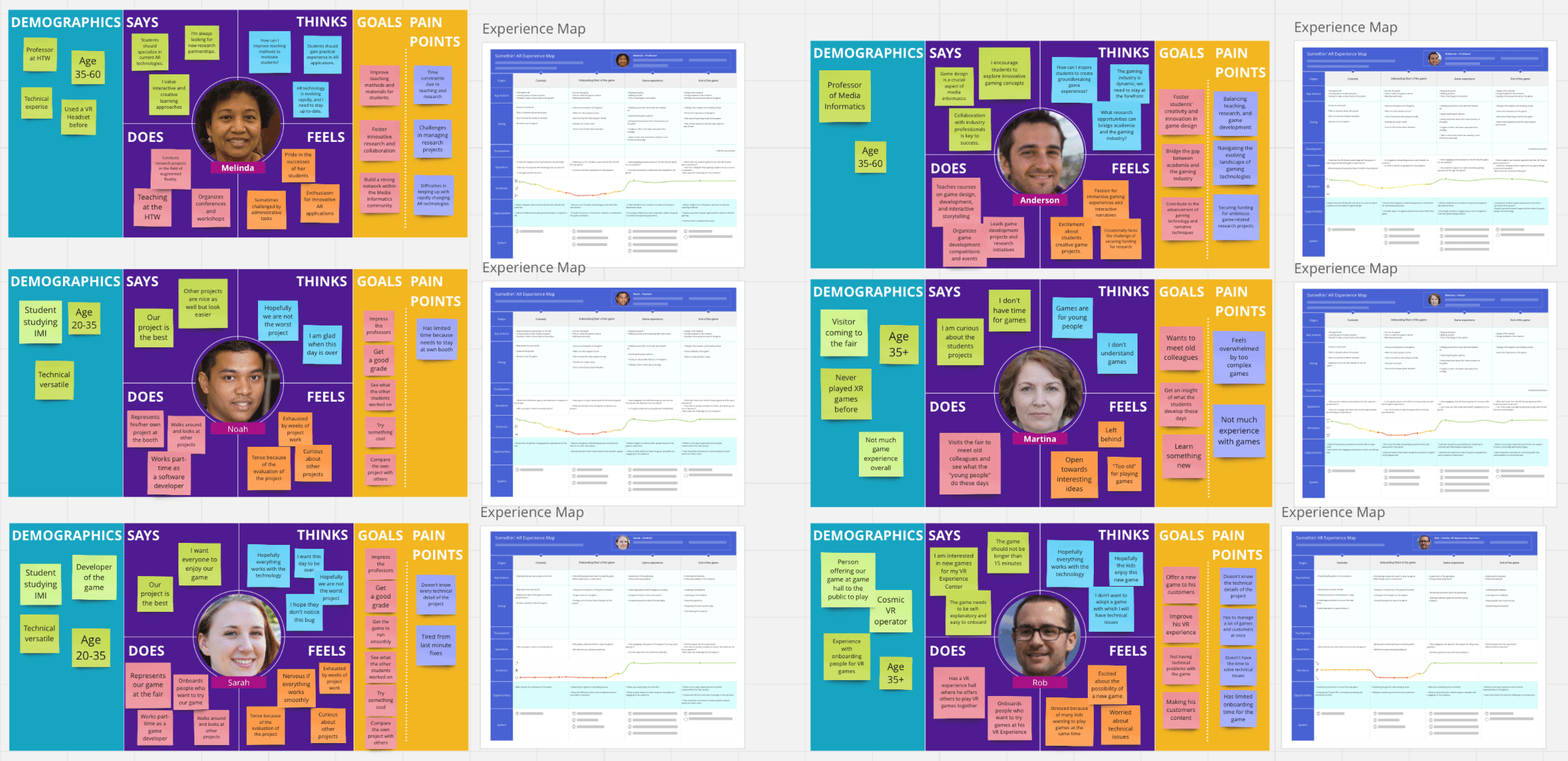

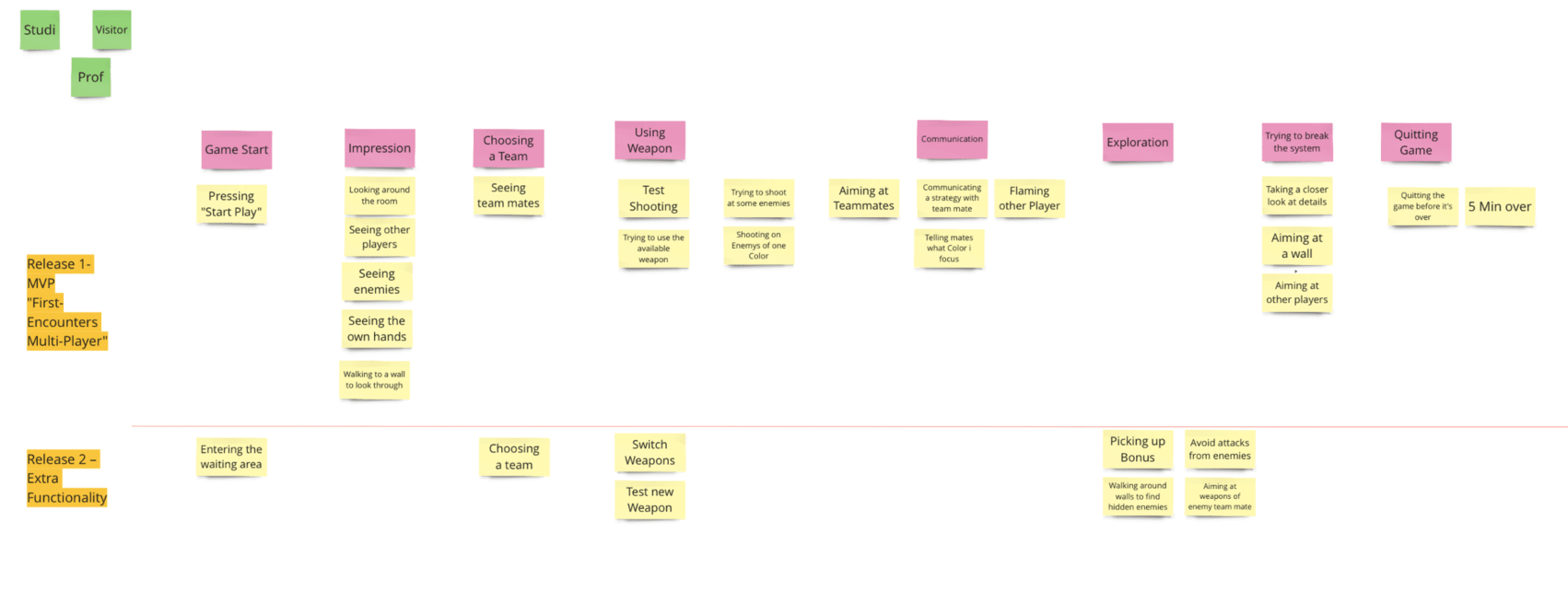

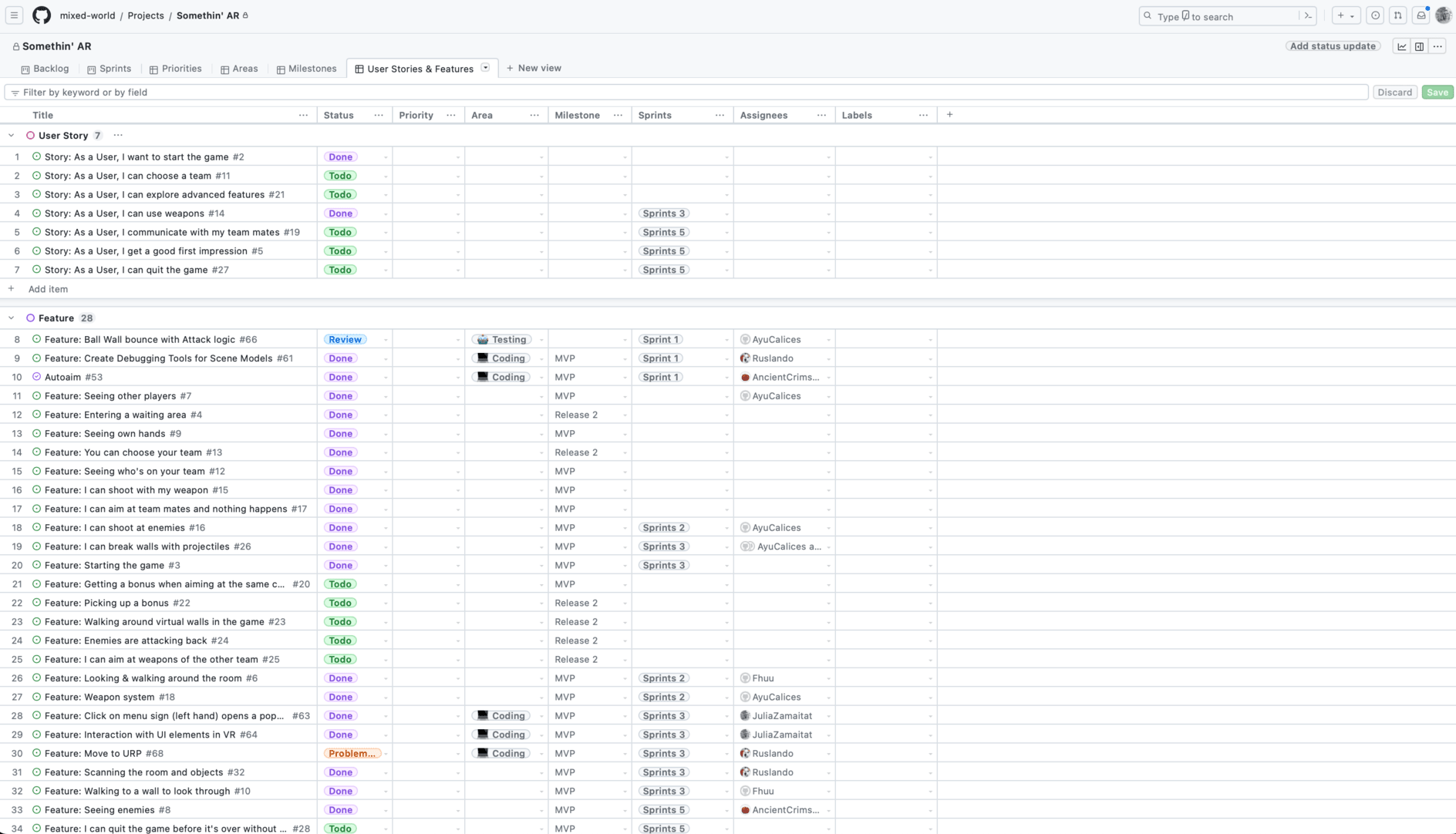

At the beginning of our project, we had a clear goal in mind: to create a game using the Meta Quest 3 headsets provided by HTW, with a focus on multiplayer elements and mixed reality features. To get our project off the ground, we started with some hands-on exploration. We tested various games that were compatible with the Quest 3, and we even took a trip to Cosmic.VR in Berlin, a virtual reality studio where friends can meet and enjoy VR games together.