One of our biggest challenges was getting to grips with an AI API, a technology that was new and exciting to us. First we had to build both a conceptual and a technical understanding of it, and then we had to avoid draining our budget. Training and testing models, running many prompts, and similar tasks all cost tokens, so we had to be careful not to empty our OpenAI wallet. Our budget was adequate, and we managed to avoid burning through millions of tokens by human error.
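One way to guard against this kind of accidental overspending is a small budget check that runs before every request. The sketch below is illustrative only and is not the code we used: the `TokenBudget` class, its method names, and the rule of thumb of roughly four characters per token are all assumptions, and a real tokenizer would give more accurate counts.

```python
class TokenBudget:
    """Tracks estimated token usage and refuses calls that would exceed a cap.

    Hypothetical helper for illustration; not part of any OpenAI library.
    """

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.used = 0

    def estimate(self, text: str) -> int:
        # Assumption: about 4 characters per token for English text.
        return max(1, len(text) // 4)

    def charge(self, prompt: str, max_completion_tokens: int) -> int:
        """Reserve tokens for one request, or raise if the cap would be exceeded."""
        cost = self.estimate(prompt) + max_completion_tokens
        if self.used + cost > self.max_tokens:
            raise RuntimeError(
                f"Budget exceeded: {self.used} used, {cost} requested, "
                f"cap is {self.max_tokens}"
            )
        self.used += cost
        return cost
```

Calling `budget.charge(prompt, max_completion_tokens)` before each API request makes a runaway loop fail fast with an exception instead of silently consuming the budget.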

Another challenge was importing and preparing factually correct fine-tuning training data, transforming it, and ensuring JSON compatibility across the various components. Importing documents as an additional source of knowledge (besides what the model already knows) also meant that we needed a way to extract the relevant information from them. We did this by having OpenAI clean up the text, removing redundant or irrelevant content such as page numbers, and then applying our own formatting before embedding it for semantic search. Prompt engineering played a big role in this.
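The overall shape of that pipeline (clean, split into chunks, embed, rank by similarity) can be sketched locally. This is a simplified stand-in rather than our implementation: a regular expression replaces the model-based cleanup, and a bag-of-words vector replaces real embeddings, so it matches on shared words rather than on meaning. All function names here are hypothetical.

```python
import math
import re
from collections import Counter

# Lines that contain only a number, optionally prefixed with "Page".
PAGE_NUMBER = re.compile(r"^\s*(page\s+)?\d+\s*$", re.IGNORECASE)


def clean_text(raw: str) -> str:
    """Drop blank lines and bare page-number lines (stand-in for model cleanup)."""
    lines = [line.strip() for line in raw.splitlines()]
    return "\n".join(l for l in lines if l and not PAGE_NUMBER.match(l))


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def search(query: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]
```

Swapping `embed` for a call to an embedding model would leave the rest of the structure unchanged, since `search` only needs a vector per chunk and a similarity function.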

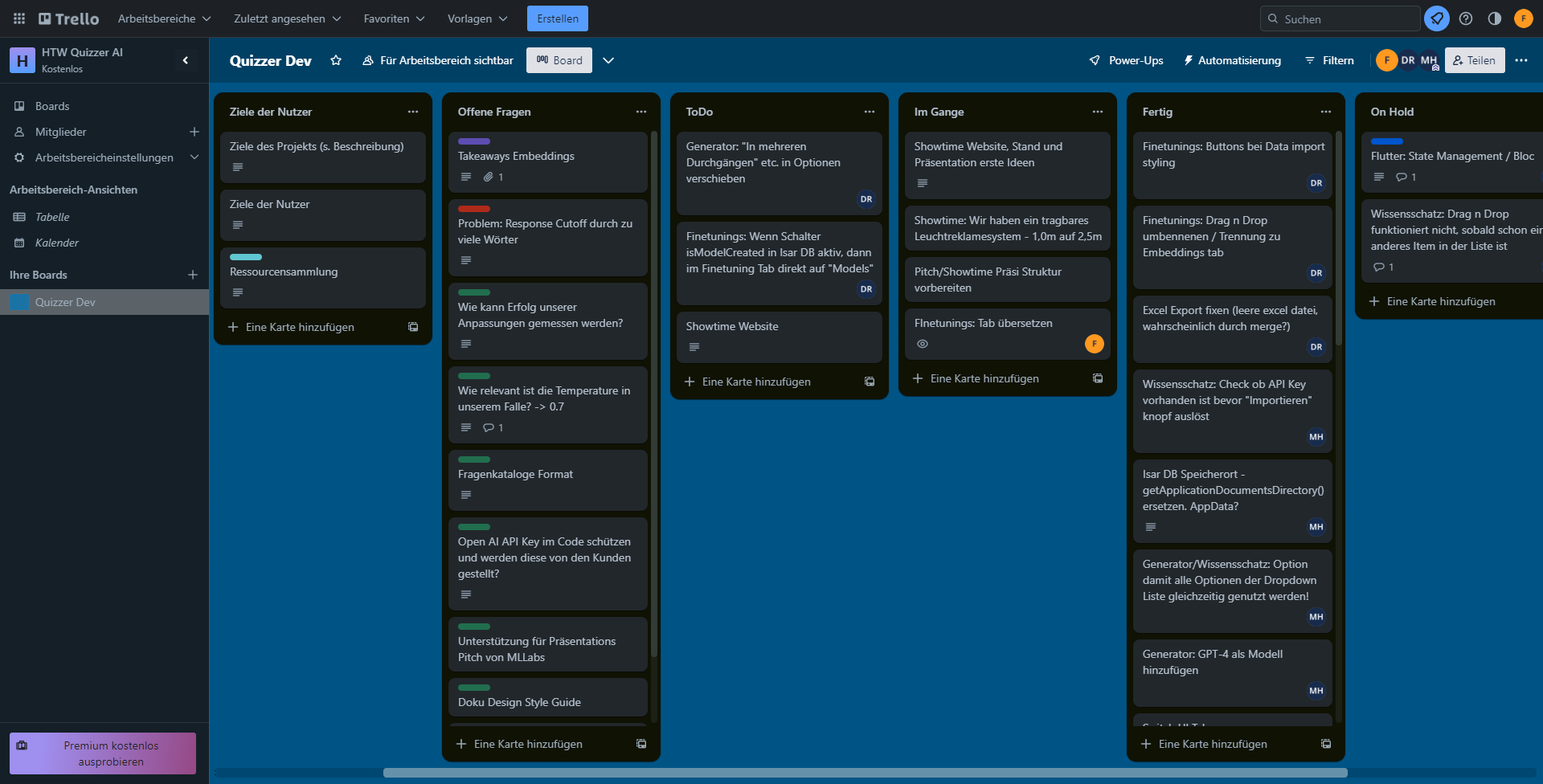

We also continually refined the UI to make the application more usable overall.

Our result is an easy-to-use Question Generator with advanced features such as support for user-supplied knowledge and a custom model made specifically for question generation, which improves the quality of the generated questions.